AI Infrastructure

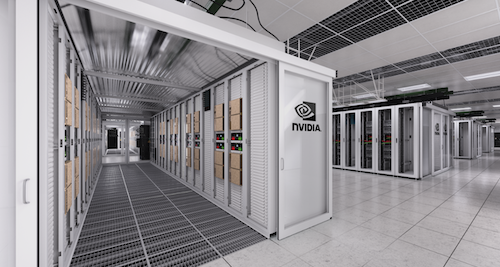

Managing AI infrastructure (e.g., DGX B200, DGX H200) involves orchestrating large-scale computing resources to efficiently train and deploy AI models, particularly large language models (LLMs) like LLaMA, Grok, Mistral, and other transformer-based architectures. We are currently working in four main areas:

- Performance analysis focuses on optimizing computation graphs, memory usage, and parallelization strategies, such as tensor and pipeline parallelism, to maximize efficiency using profiling tools (e.g., NVIDIA Nsight Systems and Nsight Compute), monitoring, and observability (e.g., NVIDIA DCGM).

- Optimization looks at efficient inter-GPU and inter-node communication strategies, such as NVLink, RDMA, and high-bandwidth networking, to minimize bottlenecks in distributed training.

- Benchmarking frameworks, such as MLPerf, TPCx-AI, and DAWNBench, play a crucial role in evaluating the performance of these models across different hardware configurations, measuring throughput, latency, and power efficiency under various workloads.

- Reliability ensures fault tolerance and automated recovery mechanisms to maintain high availability in AI clusters. By using system design patterns, load balancing, checkpointing, and failure management, downtime can be minimized.
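The checkpointing and recovery idea in the Reliability item can be illustrated with a minimal sketch. It is not the team's implementation: the function names (`save_checkpoint`, `load_checkpoint`, `train`), the JSON state layout, and the `fail_at` parameter that simulates a node failure are all assumptions made for illustration. The sketch writes each checkpoint to a temporary file and renames it into place, so a crash during the write does not leave a half-written checkpoint.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temp file, then rename it over the target path."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())
        # The rename replaces the old checkpoint in a single step.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path):
    """Return the last saved state, or a fresh state if none exists."""
    if not os.path.exists(path):
        return {"step": 0, "loss_sum": 0.0}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, checkpoint_every=10, fail_at=None):
    """Run a toy training loop that resumes from the last checkpoint.

    fail_at is a hypothetical knob that simulates a node failure.
    """
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
        state["loss_sum"] += 1.0 / (step + 1)  # stand-in for a real training step
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state
```

If a run with `fail_at=25` and `checkpoint_every=10` crashes, calling `train` again on the same path resumes from step 20, so only the work done since the last checkpoint is repeated.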

Key challenges include optimizing resource utilization, scaling communication strategies, establishing standardized benchmarks, and enhancing fault tolerance with proactive anomaly detection and self-healing mechanisms for more efficient, scalable, and resilient AI clusters.

ML for Systems

For hyperscalers, such as Huawei Cloud, the Operation and Maintenance (O&M) of cloud infrastructures and platforms can no longer be done manually or simply with off-the-shelf solutions. It requires self-developed automated systems, ideally exploiting AI to provide tools for autonomous cloud operations.

Huawei Cloud (HC) has a microservices architecture composed of hundreds of services. They are distributed over thousands of hosts in many geographical regions and operate with an availability higher than five nines. Huawei Cloud is one of the largest and fastest-growing platforms in the world. It has a strong presence throughout the world, with over 40 availability zones located across 23 geographical regions, ranging from Germany, France, South/Central America, Hong Kong, and Russia to Thailand and South Africa.

The objective of the AIOps / Reliability Team (based in Munich, Germany) was to develop new systems and tools that analyze observability data from Huawei Cloud to detect problems that impact customers, identify the root cause within seconds, and fix failures following the 1/5/10 rule (detection: 1 min, RCA: 5 min, recovery: 10 min). We built tools for anomaly detection, root-cause analysis, performance analysis, predictive maintenance, security operations, and operations automation for cloud and intelligent management (1):

- Security Operations: SecOps integrates monitoring, tools, processes, and technology to keep IT secure while reducing risk.

- Intelligent Log Analysis: Exploring the use of structured logging to facilitate the application of AI/ML methods for root-cause analysis.

- Hypervisor Reliability: Identifying health issues of hypervisors correlated with latent failures.
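The 1/5/10 rule described above can be expressed as a simple check over incident timestamps. The sketch below is illustrative only: the `Incident` record, its field names, and the `check_1_5_10` function are hypothetical, and it assumes that all three targets are measured from the moment the failure started, which the text does not specify.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Targets taken from the 1/5/10 rule: detection 1 min, RCA 5 min, recovery 10 min.
TARGETS = {
    "detection": timedelta(minutes=1),
    "rca": timedelta(minutes=5),
    "recovery": timedelta(minutes=10),
}


@dataclass
class Incident:
    """Hypothetical incident record with one timestamp per phase."""
    started: datetime
    detected: datetime
    root_cause_found: datetime
    recovered: datetime


def check_1_5_10(incident):
    """Return {phase: (elapsed, within_target)}.

    Assumption: every phase is measured from the start of the failure.
    """
    elapsed = {
        "detection": incident.detected - incident.started,
        "rca": incident.root_cause_found - incident.started,
        "recovery": incident.recovered - incident.started,
    }
    return {
        phase: (elapsed[phase], elapsed[phase] <= TARGETS[phase])
        for phase in TARGETS
    }
```

An incident detected after 40 seconds, diagnosed after 4 minutes, and recovered after 12 minutes would meet the detection and RCA targets but miss the recovery target.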

From 2015 to 2024, we applied techniques from Data Science, Machine Learning, and Deep Learning, including statistical learning, time-series analysis, big data, streaming, and data visualization. This enabled us to develop new production-ready services for troubleshooting Huawei Cloud and to detect issues that were previously undetectable.

(1) Technical University of Berlin (TUB)

AI for Operations

We developed several cutting-edge tools and solutions focused on failure prediction, failure prevention, and anomaly detection to enhance the operation of cloud infrastructures. By leveraging advanced machine learning algorithms and data analytics, we enabled HUAWEI CLOUD operators to anticipate potential issues, optimize system performance, and ensure the reliability and resilience of the cloud infrastructure.

Observability: Using machine learning, we enhanced the HUAWEI CLOUD Cloud Monitoring Service, which is used to monitor and manage the performance, health, and security of global cloud infrastructures.

- Multi-source Distributed System Data for AI-Powered Analytics, ESOCC 2020, 2020.

- Observing and Controlling Performance in Microservices

- Towards Occupation Inference in Non-instrumented Services, NCA, 2019.

- On Black-Box Monitoring Techniques for Multi-Component Services, NCA, 2018

- Self-Supervised Log Parsing. ECML-PKDD, 2020. (Rank: A)

Failure Prevention: We enhanced the global, decentralized, and scalable HUAWEI CLOUD Cloud Log Service to collect, analyze, and manage petabytes of logs and event data generated by the cloud infrastructure and on-premises systems.

- QuLog: Data-Driven Approach for Log Instruction Quality Assessment, IEEE/ACM ICPC, 2022. (Rank: A)

Failure Prediction: We developed new systems for HUAWEI CLOUD datacenters to predict the failure of HDDs, SSDs, RAM, and optical network transceivers using machine learning.

- Exploring Error Bits for Memory Failure Prediction, ACM/IEEE ICCAD, 2023. (Rank: A)

- HiMFP: Hierarchical Intelligent Memory Failure Prediction. IEEE DSN, 2023. (Rank: A)

- An Optical Transceiver Reliability. IEEE/ACM CCGrid, 2023. (nominated best paper)

- Online Memory Leak Detection in the Cloud-based Infrastructures, AIOPS 2020, 2020.

- Managing Large-Scale Edge Cluster Over Unstable Network with KubeEdge, CNCF, 2021.

Anomaly Detection: We built a distributed Cloud Trace Service for HUAWEI CLOUD to follow and profile the execution of public cloud services' requests as they travel across multiple infrastructure services, components, middleware, and systems in public and private clouds.

- Anomaly Detection and Classification using Distributed Tracing, IEEE CCGrid, 2019. (Rank: A)

- Anomaly Detection and Classification using Distributed Tracing and Deep Learning, IEEE/ACM CCGrid, 2019. (Rank: A)

- Anomaly Detection from System Tracing Data using Multimodal Deep Learning, IEEE Cloud, 2019. (Acceptance Rate: 21%)

- Self-Supervised Anomaly Detection from Distributed Traces, UCC, 2020

- Automated Analysis of Distributed Tracing. Journal of Grid Computing, 2021, (IF: 4.67).

- Self-Attentive Classification-Based Anomaly Detection in Unstructured Logs, ICDM, 2020. (Rank: A*)
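To make the idea of trace-based anomaly detection concrete, the sketch below flags unusually slow spans with a robust z-score (median and median absolute deviation) computed per operation. This is a deliberately simple statistical baseline, not the deep learning methods of the publications listed above. The span dictionary layout (`trace_id`, `operation`, `duration_ms`), the function name `find_slow_spans`, and the threshold of 3.5 are assumptions chosen for illustration.

```python
from collections import defaultdict
from statistics import median


def find_slow_spans(spans, threshold=3.5):
    """Flag spans whose duration is far above the median for their operation.

    Each span is a dict with hypothetical keys: trace_id, operation, duration_ms.
    """
    # Group durations by operation so each operation gets its own baseline.
    by_operation = defaultdict(list)
    for span in spans:
        by_operation[span["operation"]].append(span["duration_ms"])

    baselines = {}
    for operation, durations in by_operation.items():
        med = median(durations)
        mad = median(abs(d - med) for d in durations)
        baselines[operation] = (med, mad)

    anomalies = []
    for span in spans:
        med, mad = baselines[span["operation"]]
        if mad == 0:
            continue  # all durations (nearly) identical: no usable spread
        # 0.6745 rescales the MAD so the score is comparable to a z-score.
        score = 0.6745 * (span["duration_ms"] - med) / mad
        if score > threshold:
            anomalies.append((span["trace_id"], span["operation"], round(score, 1)))
    return anomalies
```

Using the median rather than the mean keeps the baseline stable when a few very slow spans are present, which is exactly the situation the detector is meant to handle.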

Cloud Reliability

From 2015 to 2020, we worked on improving the reliability and resilience of Huawei Cloud (HC) and Open Telekom Cloud (OTC). In its early days, HC depended strongly on OpenStack.

We developed several new tools and systems based on:

- Fault-injection technologies, which demonstrate that a system is robust by injecting faults that damage internal components in order to test its fault tolerance.

- Distributed Tracing (the article "So, you want to trace your distributed system? Key design insights from years of practical experience" provides a very good overview of tracing systems)

- Root-cause Analysis (Efficient Failure Diagnosis of OpenStack, IEEE Internet Computing, 2018.)
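The fault-injection approach in the list above can be sketched in a few lines. The example is a toy illustration, not the tooling we built: `inject_faults`, `call_with_retry`, and `InjectedFault` are hypothetical names, and the random number generator is passed in explicitly so that an experiment can be repeated with the same seed.

```python
import random


class InjectedFault(Exception):
    """Raised by the wrapper in place of calling the real component."""


def inject_faults(func, failure_rate, rng):
    """Wrap func so that a fraction of calls fail with InjectedFault.

    rng is any object with a random() method, e.g. random.Random(seed).
    """
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault(f"injected fault in {func.__name__}")
        return func(*args, **kwargs)
    return wrapper


def call_with_retry(func, attempts, *args, **kwargs):
    """The fault-tolerance mechanism under test: retry on injected faults."""
    last_error = None
    for _ in range(attempts):
        try:
            return func(*args, **kwargs)
        except InjectedFault as err:
            last_error = err
    raise last_error


def fetch_status():
    """Stand-in for a call to an internal component."""
    return "ok"


# Inject faults into 30% of calls, using a fixed seed for repeatability.
flaky_fetch = inject_faults(fetch_status, 0.3, random.Random(42))
```

Calling `call_with_retry(flaky_fetch, 5)` then exercises the retry logic under injected failures. Raising `failure_rate` towards 1.0 shows at which point the retry budget is no longer sufficient.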

The following presentations/lectures (1,2) provide an overview of our work on OpenStack and distributed tracing.

- Introduction: Hyperscalers, cloud monitoring, AI and O&M, monitoring formats, ML for O&M.

- OpenStack Cloud OS: Virtualization, public clouds, OpenStack system design, OpenStack services (IMS, compute, nova, scheduler, network, storage).

- OpenStack Hands-on: Setup infrastructure, install OpenStack, CLI, launch instances, attach volumes, create networks, distributed tracing.

- Distributed Tracing Technologies: Workflow for VM creation, tracing concepts, tracing systems, Zipkin, Jaeger, OpenTracing, OSProfiler.

- Distributed Trace Analysis: Monitoring data sources, troubleshooting with tracing, feature selection, trace abstraction, time series analysis, sequence analysis, LSTM.

- Distributed Trace Analysis (Hands-on): Jupyter notebook with running code for distributed trace analysis for OpenStack.

- Cloud Benchmarking: Benchmarking public cloud platforms, ECS and RDS benchmarking.

- Cloud Computing: Overview, concept, web APIs, platforms, applications, and BPM.

(1) Technical University of Berlin (TUB), (2) Technical University of Munich (TUM)

Service Systems

Our contributions on service systems placed emphasis on four fields: service description languages (with the USDL family), service system modeling (with the LSS-USDL language), service analytics (using process mining), and service networks (using principles from social networks).

- Service Analytics. We analyse large logs from IT service provisioning (e.g., application logs, transactions, ITIL) to find behaviour patterns.

- Service Descriptions. We developed the Linked USDL language (Unified Service Description Language) to describe services using computer-understandable specifications, formal ontologies (RDFS), and AI for inference.

- Service Systems. We developed the Linked Service System model for the Unified Service Description Language (LSS-USDL) using lightweight semantic models to capture service systems.

We also explored the concept of service networks. The observation that the power of service-based economies is no longer restricted to individual organizations, but spans across networks, was the main driver for conducting service network research.

- Linked USDL Privacy: Describing Privacy Policies for Service, IEEE ICWS (Rank: A), 2018.

- Modeling Service Level Agreements with Linked USDL Agreement, IEEE TSC (IF: 3.049), 2017.

- Linked USDL Agreement: Effectively Sharing Semantic Service Level Agreements on the Web, IEEE ICWS, (Acceptance Rate: 17.4%), 2015.

- A framework for next generation e-health systems and services, AMCIS (Rank: A), 2015.

- Cloud Computing Automation: Integrating USDL and TOSCA. CAiSE (Rank: A), 2013.

- Service Systems Concepts, Modeling, and Programming. Springer 2014.

See GitHub LSS-USDL, GitHub Linked-USDL

We also explored the concept of Process Analytics. Our intentions are twofold. On the one hand, we think it is fundamental to survey findings from neighboring disciplines on how Business Process Quality Metrics can be developed. In particular, we believe that we can gather additional insights from software engineering, cognitive science, and graph theory and relate them to business process modeling. A further empirical investigation might ultimately lead to establishing a complexity theory of business process models. On the other hand, to demonstrate that these metrics serve their purpose, we plan to carry out several empirical validations by means of controlled experiments.